The annotation script checks whether the video capture has been opened, iterates while the capture is open, and breaks out of the loop if it doesn't get a new frame. It works like this:

- The state starts at 10 to indicate that the procedure has not started; a blue circle means the annotation hasn't started.
- Press space to swap between states.
- Press d when you are done; a white circle means the annotation is finished.
- Press q to quit early; the annotations are then not saved.
- There is also a key to use if you made a mistake and need to start over.
- Increase the value of `speed` to make the video slower.

This allows for multiple keys to be pressed during the annotations.
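A minimal sketch of such a script in Python, assuming OpenCV (`cv2`) for playback and key handling, since the text mentions `waitKey`. The key handling lives in a pure function, `handle_key`, so it can be checked without a display. Several details are assumptions, not the original script: the first space press starts at 0 ("bad pose"), `r` is a hypothetical "start over" key, red/green mark states 0/1, `DONE = 20` is an invented state code, and pressing q after d keeps the labels. `speed` multiplies the per-frame delay, so `2.0` plays at roughly half the frame rate.

```python
import sys

# State codes: 10 ("not started") comes from the original comments and 0/1 are
# the "bad pose"/"good pose" labels; DONE = 20 is a hypothetical choice.
NOT_STARTED, BAD_POSE, GOOD_POSE, DONE = 10, 0, 1, 20

# Circle colours in OpenCV's BGR order. Blue and white follow the comments;
# red and green for the two pose states are assumptions.
COLOURS = {
    NOT_STARTED: (255, 0, 0),   # blue: annotation hasn't started
    BAD_POSE: (0, 0, 255),      # red (assumed)
    GOOD_POSE: (0, 255, 0),     # green (assumed)
    DONE: (255, 255, 255),      # white: we are done
}


def handle_key(state, key):
    """Map one key code to (new_state, action); action is continue/reset/quit."""
    if key == ord(" "):
        if state == NOT_STARTED:
            return BAD_POSE, "continue"   # assumption: annotation starts at 0
        if state in (BAD_POSE, GOOD_POSE):
            return 1 - state, "continue"  # swap 0 <-> 1
    elif key == ord("d"):
        return DONE, "continue"
    elif key == ord("r"):                 # hypothetical "start over" key
        return NOT_STARTED, "reset"
    elif key == ord("q"):
        return state, "quit"
    return state, "continue"


def annotate(path, speed=2.0):
    """Play the video at roughly 1/speed of its frame rate.

    Returns the list of per-frame labels, or None if the user quit early.
    """
    import cv2  # imported here so handle_key() works without OpenCV installed

    cap = cv2.VideoCapture(path)
    if not cap.isOpened():  # check whether the video capture has been opened
        raise IOError(f"cannot open {path}")
    fps = cap.get(cv2.CAP_PROP_FPS) or 30.0  # 30 fps fallback is an assumption
    delay = max(1, int(speed * 1000 / fps))  # larger speed -> slower video

    state, labels = NOT_STARTED, []
    while cap.isOpened():  # iterate while the capture is open
        ok, frame = cap.read()
        if not ok:  # break the loop if we don't get a new frame
            break
        cv2.circle(frame, (20, 20), 10, COLOURS[state], -1)  # upper-left marker
        cv2.imshow("annotate", frame)
        if state != DONE:
            labels.append(state)  # label for the frame just shown
        state, action = handle_key(state, cv2.waitKey(delay) & 0xFF)
        if action == "quit":
            if state != DONE:
                labels = None  # quitting early: annotations are not saved
            break
        if action == "reset":
            labels = []
            cap.set(cv2.CAP_PROP_POS_FRAMES, 0)  # rewind and start over
    cap.release()
    cv2.destroyAllWindows()
    return labels


if __name__ == "__main__" and len(sys.argv) > 1:
    print(annotate(sys.argv[1]))
```

Run it as `python annotate.py video.avi` (the file name is only an example). Keeping `handle_key` free of OpenCV calls makes it easy to add keys later without touching the playback loop.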

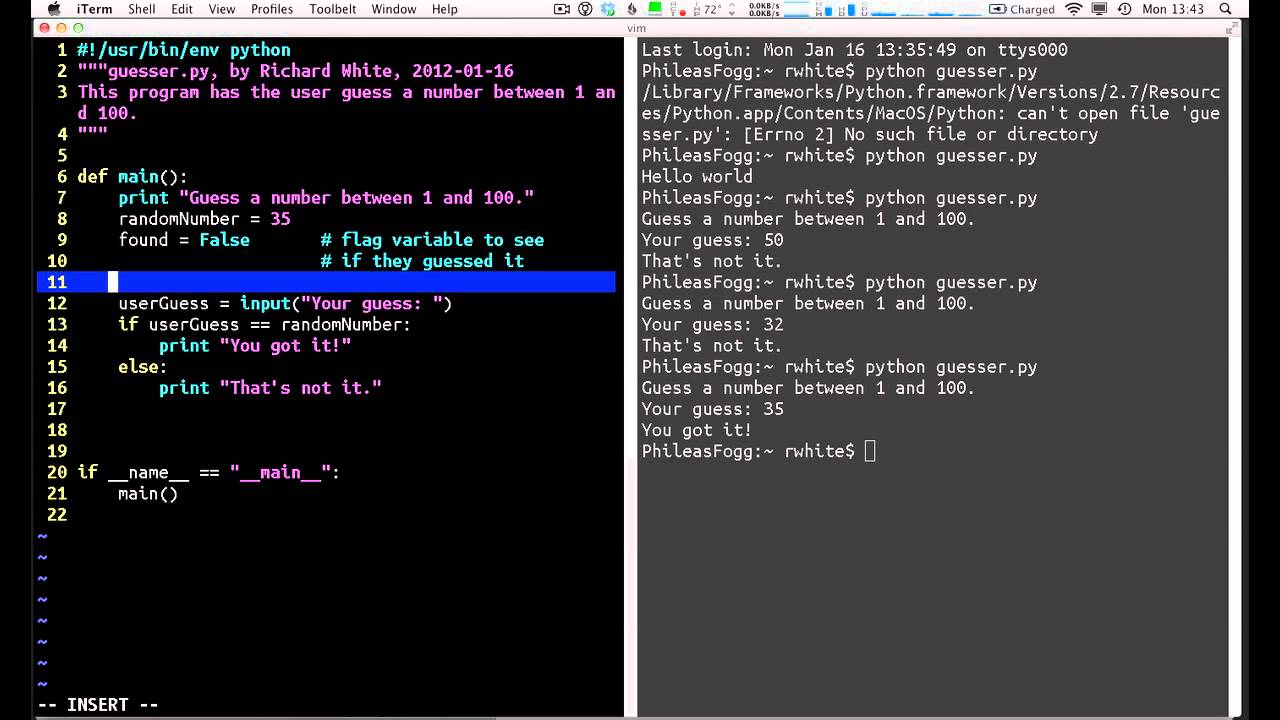

I have a bunch of videos and depth maps showing human poses from the Microsoft Kinect. I can get a skeleton of the human in the video, but what I want to do is recognize a certain pose from this skeleton data. To do that I need to annotate each frame in the videos with a 0 or 1, corresponding to "bad pose" and "good pose". I want to be able to play back the AVI file in MATLAB, press space to switch between these two states, and simultaneously add the state variable to an array giving the state for each frame in the video. I want to do this at maybe half the regular frame rate of the video.

Is there a tool in MATLAB that can do this? Otherwise MATLAB is not a restriction; Python, C++ or any other language is fine. I have been googling around, and most of what I have found is for annotating individual frames with a polygon.

EDIT: I used the solution provided by miindlek and decided to share a few things in case someone runs into this. I needed to see in the video which annotation I was assigning to each frame, so I drew a small circle in the upper left corner of the video as I displayed it. I also capture the key pressed with waitKey and then do something based on the output. Hopefully this will be useful for someone else later.

In Python, the `from __future__ import annotations` statement changes the default behaviour of type annotations in function signatures. This feature was introduced in Python 3.7 (PEP 563) and significantly changes how type hints are treated.

By default, Python evaluates the annotations in a function signature as soon as the `def` statement runs. This causes problems with forward references, especially in classes whose methods refer to the class itself: while the class body is executing, the class name is not yet bound, so the annotation raises a `NameError`.

The `from __future__ import annotations` statement changes this behaviour. When you include it at the beginning of a module, Python stores annotations as strings instead of evaluating them; they are only evaluated on demand, for example by `typing.get_type_hints()`. This means you can use types defined later in the module, even self-referential ones.

Here's an example to illustrate the difference. Without the import, `def foo(bar: List): pass` raises a `NameError` if `List` has not been imported, because the annotation is evaluated immediately. With `from __future__ import annotations` as the first statement of the module (Python 3.7 and later), the same definition succeeds, since the annotation is stored as the string `'List'` and never evaluated.

This postponed evaluation was originally planned to become the default in Python 3.10, but that change was postponed, so through Python 3.13 you still need the import explicitly. Python 3.14 instead evaluates annotations lazily by default (PEP 649), which also avoids the `NameError` for forward references in most cases. Both changes aim to improve the usability of type hints, especially when dealing with circular references.
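To make the difference concrete, here is a small, self-contained sketch. The helper `define` and the sample sources are hypothetical, written only for illustration: each snippet is run as a separate module with `exec`, so the `__future__` import inside a snippet doesn't affect the surrounding code. It shows the annotation being stored as a string and later resolved by `typing.get_type_hints()`. The result for the plain version depends on the Python version, as noted in the comments.

```python
import typing

# The example from the text: `List` is used in an annotation but never imported.
PLAIN = "def foo(bar: List):\n    pass\n"
# The same code with the __future__ import as the module's first statement.
POSTPONED = "from __future__ import annotations\n" + PLAIN
# A class whose method refers to the class itself.
NODE = (
    "from __future__ import annotations\n"
    "class Node:\n"
    "    def link(self, other: Node) -> Node:\n"
    "        return other\n"
)


def define(source):
    """Execute source as a separate module; return its namespace or the error name."""
    namespace = {}
    try:
        exec(source, namespace)
    except NameError as exc:
        return type(exc).__name__
    return namespace


plain = define(PLAIN)
postponed = define(POSTPONED)
nodes = define(NODE)

# Python 3.7-3.13 evaluate the annotation when `def` runs, so this is "NameError";
# Python 3.14 evaluates annotations lazily and returns the namespace instead.
print("without the import:", plain if isinstance(plain, str) else "foo defined")

# With the import, the annotation is stored as a plain string.
print("with the import:", postponed["foo"].__annotations__)  # {'bar': 'List'}

# Strings are only resolved on demand; an undefined name still fails then.
try:
    typing.get_type_hints(postponed["foo"])
    hint_error = None
except NameError as exc:
    hint_error = exc
print("get_type_hints:", hint_error)

# The self-reference resolves once the class exists in the module namespace.
node_hints = typing.get_type_hints(nodes["Node"].link)
print(node_hints)
```

In an ordinary module you would simply put `from __future__ import annotations` at the top of the file; `exec` is used here only so both behaviours can be shown side by side.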
